Off-Road Autonomy

A full off-road autonomous navigation system capable of driving in complete darkness. From cross-modality calibration to stereo vision and traversability estimation, this project pushed the limits of off-road autonomy.

Bringing a full-scale ATV into unstructured terrain at night is not easy. At the AirLab, I’ve had the opportunity to work with an incredible team to tackle this challenge, contributing across the entire stack, from sensor integration to perception, autonomy, and field testing.

One of the first important steps in integrating new sensors is calibration. Guided by a detailed material study to make sure the board's pattern is visible in every modality, we designed a custom calibration board capable of calibrating RGB cameras (mono/stereo), thermal cameras (mono/stereo), and multiple LiDARs. A lot of effort went into sensor drivers, time synchronization, and calibration, but these steps were crucial for achieving accurate sensor fusion and robust multi-modal autonomy.
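A common way to sanity-check a LiDAR-to-camera calibration is to project LiDAR points into the image and see whether they line up with scene edges. The sketch below is a generic illustration, not this project's code: it assumes an undistorted pinhole camera, a 4x4 extrinsic `T_cam_lidar` produced by calibration, and an intrinsic matrix `K`; the function name is hypothetical.

```python
import numpy as np

def project_lidar_to_image(points_lidar, T_cam_lidar, K, image_size):
    """Project (N, 3) LiDAR points into a pinhole camera (hypothetical helper).

    T_cam_lidar: (4, 4) extrinsic transform, LiDAR frame -> camera frame.
    K:           (3, 3) camera intrinsics (assumes lens distortion already removed).
    image_size:  (width, height) in pixels.
    Returns pixel coords (M, 2), depths (M,), and a boolean mask over the N inputs.
    """
    n = points_lidar.shape[0]
    # Homogeneous coordinates so the 4x4 transform applies rotation and translation.
    pts_h = np.hstack([points_lidar, np.ones((n, 1))])
    pts_cam = (T_cam_lidar @ pts_h.T).T[:, :3]

    depth = pts_cam[:, 2]
    in_front = depth > 1e-6  # drop points at or behind the image plane

    # Pinhole projection: u = fx*x/z + cx, v = fy*y/z + cy.
    uvw = (K @ pts_cam.T).T
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / uvw[:, 2:3]

    w, h = image_size
    in_image = (uv[:, 0] >= 0) & (uv[:, 0] < w) & (uv[:, 1] >= 0) & (uv[:, 1] < h)
    mask = in_front & in_image
    return uv[mask], depth[mask], mask
```

With `K = [[500, 0, 320], [0, 500, 240], [0, 0, 1]]` and an identity extrinsic, a point 10 m straight ahead lands at the principal point (320, 240), while a point behind the camera is masked out. Overlaying the returned pixels on thermal and RGB images is a quick visual check that the extrinsics agree across modalities.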

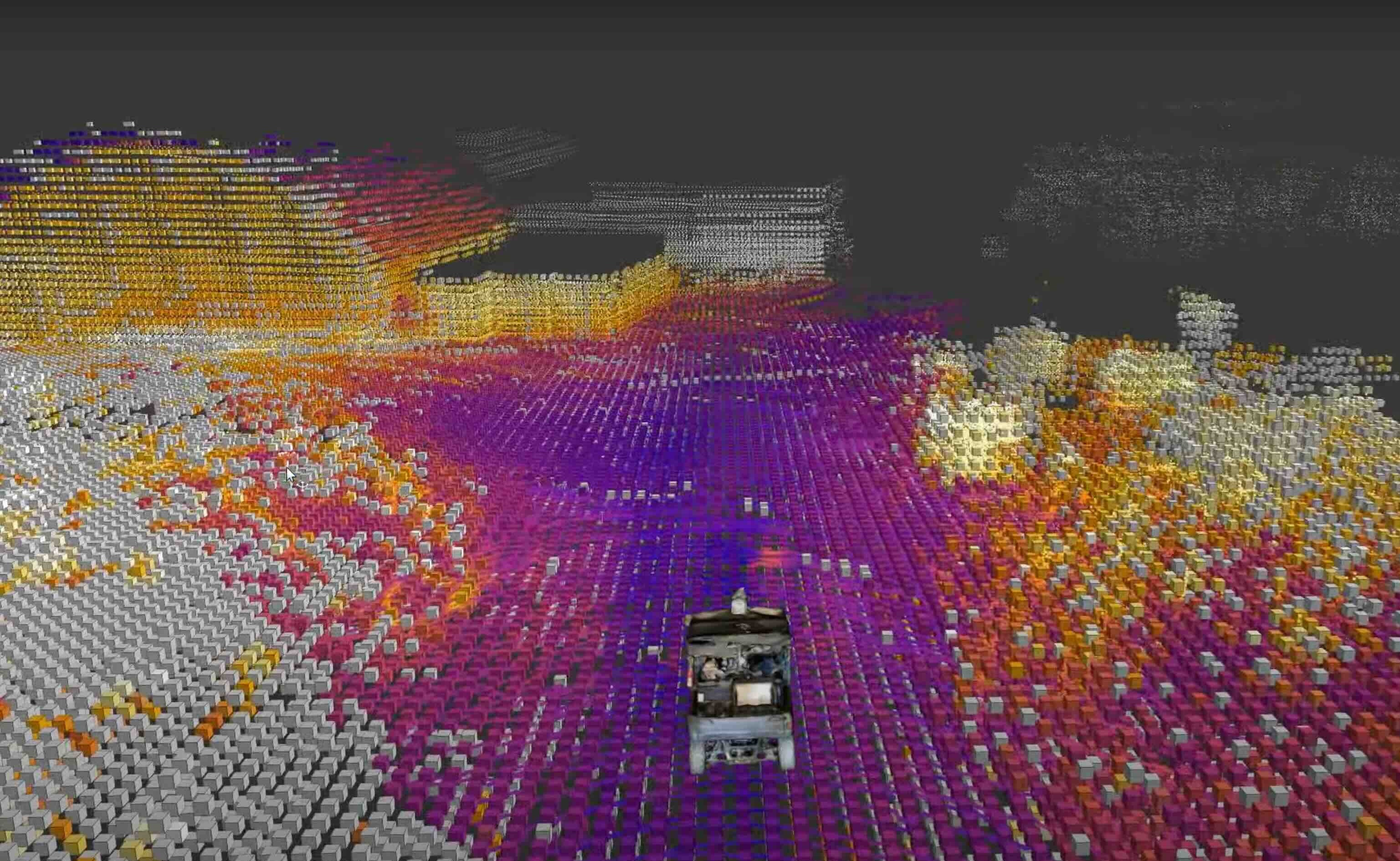

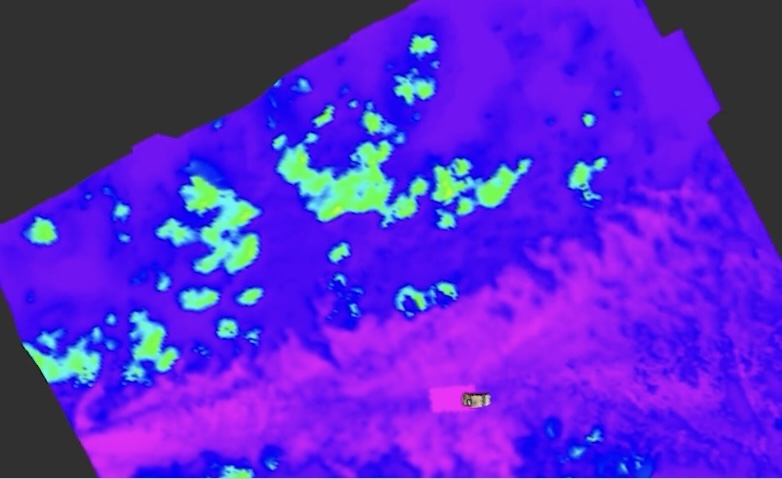

On top of this foundation, we fused thermal data with LiDAR-SLAM to enable thermal mapping. We then leveraged a visual foundation model (VFM) and advanced image preprocessing to extract self-supervised features, which we combined with inverse reinforcement learning to estimate traversability. This allows us to bring autonomy into conditions where visible light fails, such as nighttime and smoke-filled environments.
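One simple way to picture thermal mapping is to take map points from LiDAR-SLAM, project them into each thermal frame using the SLAM pose, sample the thermal value at that pixel, and average the readings per voxel. This is a minimal sketch under stated assumptions, not the project's pipeline: the function name, the voxel-averaging scheme, and nearest-pixel sampling are all illustrative choices, and it ignores occlusion (points hidden behind closer geometry still receive a value).

```python
import numpy as np

def accumulate_thermal(points_world, T_world_cam, K, thermal, voxel_size, sums, counts):
    """Attach thermal readings to world-frame map points, accumulated per voxel.

    Hypothetical helper. points_world: (N, 3) map points (e.g. from a LiDAR-SLAM map).
    T_world_cam: (4, 4) thermal camera pose in the world frame at image time
                 (assumed to be the SLAM pose composed with the calibrated extrinsics).
    K: (3, 3) thermal intrinsics. thermal: (H, W) thermal image.
    sums, counts: dicts keyed by integer voxel index, updated in place.
    NOTE: no occlusion handling; every point in view is assigned a value.
    """
    T_cam_world = np.linalg.inv(T_world_cam)  # world -> camera
    n = points_world.shape[0]
    pts_cam = (T_cam_world @ np.hstack([points_world, np.ones((n, 1))]).T).T[:, :3]

    valid = pts_cam[:, 2] > 1e-6  # keep points in front of the camera
    uvw = (K @ pts_cam[valid].T).T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    h, w = thermal.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)

    pts = points_world[valid][inside]
    temps = thermal[v[inside], u[inside]]  # nearest-pixel sampling
    voxels = np.floor(pts / voxel_size).astype(int)
    for vox, t in zip(voxels, temps):
        key = tuple(int(c) for c in vox)
        sums[key] = sums.get(key, 0.0) + float(t)
        counts[key] = counts.get(key, 0) + 1

def voxel_means(sums, counts):
    """Mean thermal value per voxel."""
    return {k: sums[k] / counts[k] for k in sums}
```

Calling `accumulate_thermal` once per thermal frame and then `voxel_means` yields a sparse thermal map; keeping running sums rather than overwriting values smooths out per-frame noise as the vehicle revisits an area.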

Looking ahead, we are working to reduce the system’s reliance on LiDAR. We are developing a stereo visual-inertial odometry (VIO) pipeline with learned uncertainty, with the goal of enabling fully passive autonomy.
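Why uncertainty matters for stereo becomes clear from the depth equation. For a rectified stereo pair with focal length f (pixels) and baseline B (meters), depth is Z = fB/d, so a first-order propagation of disparity noise gives σ_Z = (fB/d²)·σ_d = Z²/(fB)·σ_d: depth error grows quadratically with range. The sketch below assumes a network that predicts a per-pixel disparity standard deviation; it is a generic illustration, not the project's VIO pipeline, and the function name is hypothetical.

```python
import numpy as np

def disparity_to_depth(disparity, disparity_std, focal_px, baseline_m, min_disparity=0.1):
    """Convert disparity and its predicted std-dev to depth and depth std-dev.

    Z       = f * B / d
    sigma_Z = (f * B / d**2) * sigma_d   (first-order / linearized propagation)
    Pixels with disparity <= min_disparity are marked invalid (NaN).
    """
    d = np.asarray(disparity, dtype=float)
    # Allow a scalar std-dev or a per-pixel map (assumed network output).
    s = np.broadcast_to(np.asarray(disparity_std, dtype=float), d.shape)
    valid = d > min_disparity

    fb = focal_px * baseline_m
    depth = np.full(d.shape, np.nan)
    depth_std = np.full(d.shape, np.nan)
    depth[valid] = fb / d[valid]
    depth_std[valid] = fb / d[valid] ** 2 * s[valid]
    return depth, depth_std
```

For example, with f = 500 px and B = 0.5 m, a 1-pixel disparity error gives a depth error of about 0.1 m at 5 m but about 2.5 m at 25 m. An estimator could plausibly use such per-point variances to down-weight distant or ambiguous stereo measurements, which is one reason learned uncertainty is attractive when LiDAR is removed.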

For more technical details, please refer to our project website.

Media Coverage:

- CMU RI News: Taking Autonomous Driving Off-Road

- TRIB LIVE: CMU’s Tartan Driver takes autonomous driving off Pittsburgh’s beaten path

- Pittsburgh Post-Gazette: Self-driving ATVs — designed at CMU — are made to help in times of disaster

- WTRF: Carnegie Mellon University students are on the road to success with self-driving ATV